Seedream 4.0 Tops Global Rankings: Redefining the Future of AI Image Generation

The AI creative landscape just saw a significant shake-up. JiMeng’s Seedream 4.0 has taken the top position on Artificial Analysis’s global leaderboard in both the Text-to-Image and Image Editing categories. This marks a pivotal moment: Google’s Nano Banana, widely treated as the benchmark to beat, has been overtaken. Seedream 4.0 sets new standards in clarity, aesthetic consistency, and, notably, Chinese text rendering.

At its core, Seedream 4.0 fuses high-fidelity generation and advanced editing into a single model powered by SeedEdit 3.0, enabling native 4K outputs and the simultaneous use of up to 10 reference images. Below we break down four real-world editing scenarios that showcase what this unified approach actually delivers.

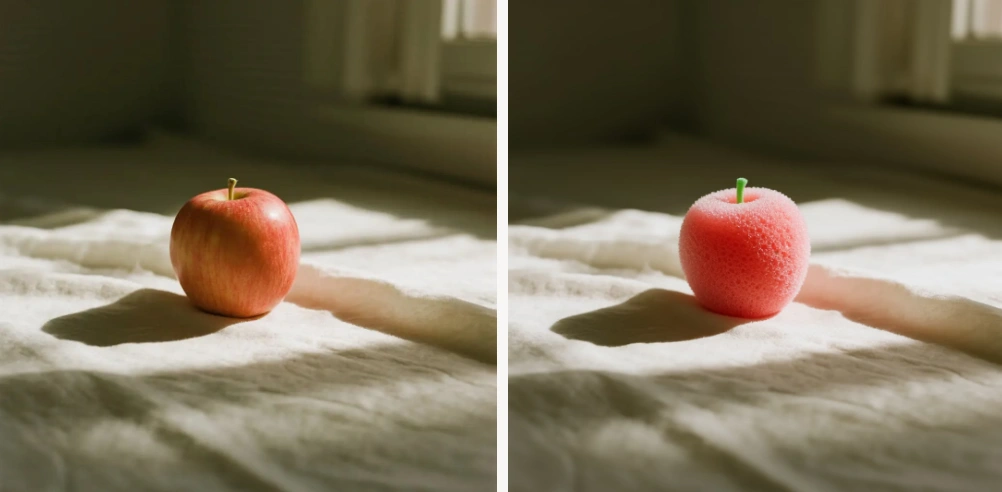

Case 1 — Lighting Transformation: “Change the scene lighting to soft light.”

Seedream 4.0 treats lighting as an artistic variable rather than a simple filter. In practical terms, the result looks as though the model has accounted for scene geometry, material responses, and color balance and then relit the whole frame: shadows are gentler, highlights are diffused, and tonal transitions are smoother.

Why it matters: Traditional edit pipelines often treat lighting changes as post-process overlays, which can break realism. Because Seedream’s editing is integrated into the model, the lighting change is applied to the scene as a whole, preserving texture fidelity and consistent shadow direction.

Sample prompt (user-facing): “Turn the scene lighting into soft light — reduce contrast, diffuse highlights, keep shadow directions consistent.”

Expected outcome: Images look naturally softer (as if shot under overcast conditions or through a softbox), without texture washout or mismatched shadow artifacts.
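The sample prompt above pairs one primary instruction with a few constraints. A minimal Python sketch of that pattern follows; `build_edit_prompt` is a hypothetical helper written for illustration and is not part of any Seedream or JiMeng API.

```python
def build_edit_prompt(instruction: str, constraints: list[str]) -> str:
    """Join one edit instruction with optional constraints into a single prompt string."""
    instruction = instruction.strip().rstrip(".")
    if not instruction:
        raise ValueError("instruction must not be empty")
    # Drop blank constraints so the prompt has no dangling separators.
    cleaned = [c.strip().rstrip(".") for c in constraints if c.strip()]
    if not cleaned:
        return instruction + "."
    return f"{instruction}: {', '.join(cleaned)}."


prompt = build_edit_prompt(
    "Turn the scene lighting into soft light",
    ["reduce contrast", "diffuse highlights", "keep shadow directions consistent"],
)
print(prompt)
```

Keeping the constraints in a list makes it easy to add or drop one (for example, shadow direction) and compare the results across iterations.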

Case 2 — Perspective Shift: “Switch the viewpoint to a top-down angle.”

Changing perspective historically required re-rendering or manual compositing. Seedream 4.0 accomplishes convincing viewpoint changes by understanding scene depth, object relationships, and occlusion. When asked to switch to a top-down (bird’s-eye) angle, the model reorganizes spatial layouts, resizes objects according to depth cues, and reconstructs previously hidden surfaces — all while maintaining lighting and texture continuity.

Why it matters: This capability streamlines creative workflows: designers can quickly test composition alternatives without re-shooting or rebuilding 3D assets.

Sample prompt: “Change the camera to a top-down perspective; preserve object proportions and maintain original lighting direction.”

Expected outcome: A believable top-down composition with correct foreshortening and without jarring perspective errors or unnatural object overlaps.

Case 3 — Material Conversion: “Turn the surface texture into foam.”

Material edits depend on nuanced modeling of physical appearance: how light scatters, the microstructure of surfaces, and how edges respond. Seedream 4.0’s material transformations reflect this. Asking for “foam” causes the model to re-render surfaces with porous, low-reflectance microstructures, softened edges, and believable subsurface scattering where appropriate. The result is not a flat texture swap but a convincing re-materialization of objects in the scene.

Why it matters: For product visuals, advertising, and concept art, material-level edits accelerate iteration. Designers can audition tactile changes without 3D software.

Sample prompt: “Replace the object’s material with foam — add porous texture, soften reflections, keep the original form.”

Expected outcome: The object appears convincingly foamy — surface detail and light behavior match the new material, while the object’s silhouette and relation to environment remain coherent.

Case 4 — Cross-Image Content Replacement: “Replace the clothing in Image 1 with the outfit from Image 2.”

This is where the unified model architecture truly shines. Cross-image replacement demands correspondence mapping (which part of Image 2 maps to which part of Image 1), style transfer, and semantic consistency. Seedream 4.0 can ingest multiple references (up to 10), identify the target garment in one image, and recompose it onto a different subject while preserving pose, lighting, and fabric deformation. The model also reconciles color grading and shadowing so that the transplanted clothing appears native to the target photo.

Why it matters: For fashion editors, marketers, and visual storytellers, this replaces laborious manual compositing with a single, instruction-driven action.

Sample prompt: “Swap Image 1’s outfit with the garment from Image 2; keep Image 1’s pose and lighting.”

Expected outcome: A seamless clothing transfer where drape, shading, and contact with the body look natural — not pasted-on or out of context.
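Because the prompt refers to references by position ("Image 1", "Image 2") and the model accepts at most 10 of them, it can help to validate a request before sending it. The sketch below assumes references are labeled in the order they are supplied; `EditRequest` and `MAX_REFERENCE_IMAGES` are hypothetical names for illustration, not part of any real Seedream or JiMeng SDK.

```python
from dataclasses import dataclass, field

MAX_REFERENCE_IMAGES = 10  # limit stated in the article; not read from any API


@dataclass
class EditRequest:
    """Hypothetical container for one multi-reference edit; not a real Seedream type."""

    prompt: str
    reference_images: list[str] = field(default_factory=list)  # file paths or URLs

    def __post_init__(self) -> None:
        if not self.prompt.strip():
            raise ValueError("prompt must not be empty")
        if not self.reference_images:
            raise ValueError("at least one reference image is required")
        if len(self.reference_images) > MAX_REFERENCE_IMAGES:
            raise ValueError(
                f"got {len(self.reference_images)} reference images; "
                f"the limit is {MAX_REFERENCE_IMAGES}"
            )

    def labeled_references(self) -> dict[str, str]:
        """Map 'Image 1', 'Image 2', ... to references, in the order given (an assumption)."""
        return {f"Image {i}": ref for i, ref in enumerate(self.reference_images, start=1)}


request = EditRequest(
    prompt="Swap Image 1's outfit with the garment from Image 2; keep Image 1's pose and lighting.",
    reference_images=["subject.png", "outfit.png"],  # placeholder file names
)
print(request.labeled_references())
```

Checking the label mapping before submission makes it harder to swap the subject and the garment by accident when several references are in play.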

The Technical and Market Implications

Seedream 4.0 combines native 4K generation, multi-reference understanding (up to 10 images per pass), and an in-model editing engine (SeedEdit 3.0). Together these address three long-standing pain points: fidelity loss when moving between separate generation and editing tools, weak handling of non-Latin scripts (notably Chinese typography), and limited multi-reference compositional reasoning. By eliminating tool handoffs and running both generation and complex edits inside one coherent model, JiMeng reduces artifacts, preserves aesthetics, and shortens iteration cycles.

From a market perspective, Seedream’s superior Chinese rendering is especially consequential. Many global models struggle with non-Latin text placement and stroke fidelity; Seedream’s performance here opens direct opportunities in Asia-Pacific markets where bilingual, culturally accurate visual content is critical.

Conclusion

Seedream 4.0 is less an incremental update and more a structural shift — a single-model approach that merges high-end synthesis with precise, instruction-driven editing. The four scenarios above (lighting, perspective, material, and cross-image replacement) are practical demonstrations of what unified visual intelligence can deliver: faster iteration, higher realism, and cross-lingual competence. For creators and enterprises pushing boundaries in visual content, Seedream 4.0 is a clear signal that the next wave of AI imagery will be both more capable and more context-aware.

How to Create a Viral AI Halloween Photo Video on TikTok

Oct 27, 2025

How to Create a Viral “AI Baby Calling Dad” Video on TikTok

Oct 28, 2025

How to Create a Viral AI Video Like the Singing Cat on TikTok

Oct 28, 2025

How to Create a Viral Instagram Pet Lip Sync Video Like the Adorable Cat on a Finger Saying "Not Good"

Oct 23, 2025

Cracking Design Challenges with Nano Banana: Smarter Marketing Made Simple

As a founder and part-time AI enthusiast, every time a big tech company releases a new model, I can’t resist testing it out. I want to understand its strengths, limits, and real-world potential — and most importantly, how it can boost my business by saving time while improving creative output.

By Skylar 一 Oct 29, 2025- Nano Banana

- AI Image Generator

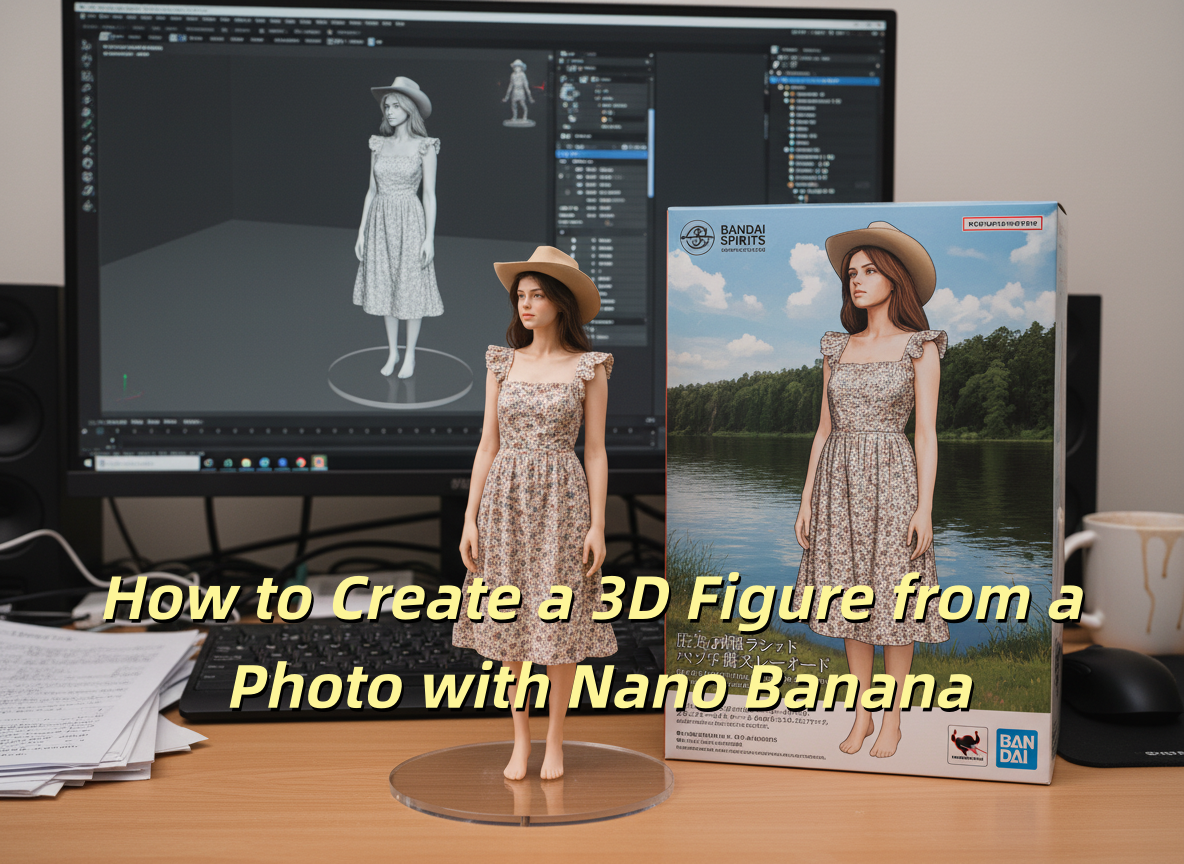

How to Create a 3D Figure from a Photo with Nano Banana

With Nano Banana, you can transform a simple image into a detailed, 3D figurine-style model. Whether you’re looking to turn a portrait into a stylized figurine or create a fully custom 3D model, Nano Banana offers a simple and efficient solution for artists, designers, and enthusiasts alike.

By Skylar 一 Oct 29, 2025- Nano Banana

- AI Image Generator

How to Use Google Nano Banana: Revolutionizing Your Photo Editing Experience

Google Nano Banana offers an incredible suite of AI-driven features that allow you to generate and enhance images effortlessly. From turning casual photos into professional ID pictures to restoring old, damaged photos with stunning accuracy, this tool is designed to meet a wide range of needs.

By Skylar 一 Oct 29, 2025- Nano Banana

- AI Image Generator

- X

- Youtube

- Discord